Sebastian Raschka, the renowned AI researcher behind the influential "Ahead of AI" newsletter, has just published a comprehensive analysis of modern LLM architectures that every AI professional should read. With over 127,000 subscribers following his insights, Raschka's latest piece examines how large language model architectures have evolved from the original GPT to today's cutting-edge models like DeepSeek-V3 and the newly released OpenAI gpt-oss models.

As NeuroCluster continues to develop our European AI infrastructure with models like Supernova 2, understanding these architectural innovations is crucial for staying at the forefront of AI development. Let's dive into the key insights from Raschka's analysis and what they mean for the future of AI.

Key Insight

Despite seven years of development since the original GPT, modern LLMs like DeepSeek-V3 and Llama 4 remain structurally similar to their predecessors. The real innovation lies in efficiency optimizations and specialized components rather than fundamental architectural overhauls.

DeepSeek-V3: Leading the Efficiency Revolution

DeepSeek-V3's MoE architecture showing the transformation from standard FeedForward to multiple expert layers

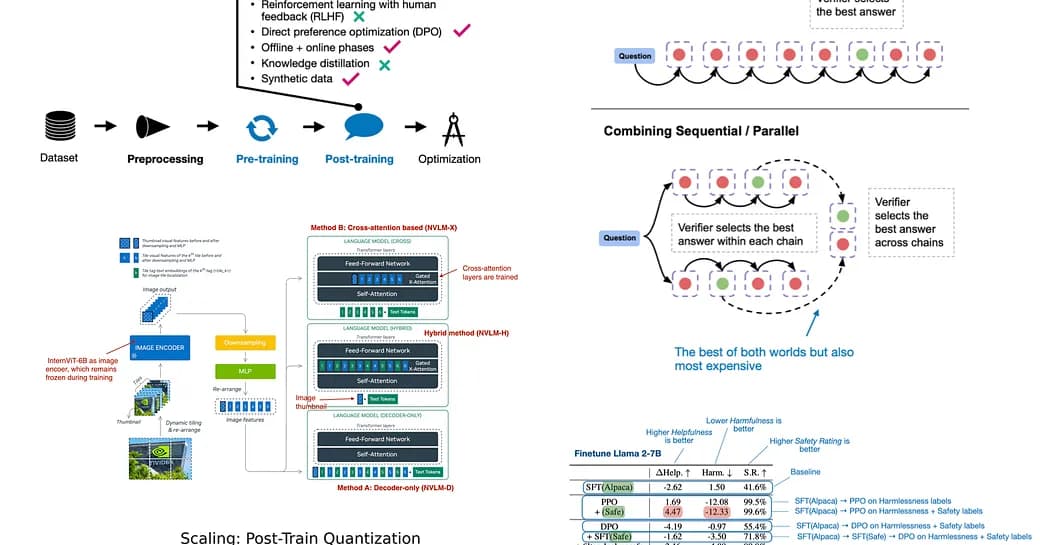

Raschka highlights two groundbreaking techniques in DeepSeek-V3 that significantly improve computational efficiency:

Multi-Head Latent Attention (MLA)

Comparison between Multi-Head Attention (MHA) and Grouped-Query Attention (GQA) showing key and value sharing

While most models have adopted Grouped-Query Attention (GQA) to reduce memory usage, DeepSeek took a different approach with Multi-Head Latent Attention. GQA shares key and value heads across groups of query heads. MLA instead compresses the key and value tensors into a lower-dimensional space before storing them in the KV cache, then projects them back to their original size when they are used during inference.
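The following sketch makes the memory argument concrete. It counts how many values each scheme caches per token and per layer, then totals them for one long sequence. All sizes are hypothetical round numbers, not DeepSeek-V3's configuration: 32 query heads of dimension 128, 8 key/value groups for GQA, a 512-dimensional MLA latent, 32 layers, and 16-bit values. The MLA count also ignores the small decoupled RoPE key that DeepSeek caches alongside the latent.

```python
# Hypothetical per-layer settings, chosen for illustration only.
N_HEADS = 32      # query heads
HEAD_DIM = 128    # dimension per head
N_KV_GROUPS = 8   # GQA: key/value heads, each shared by a group of query heads
LATENT_DIM = 512  # MLA: size of the compressed KV latent (assumed)


def kv_elems_per_token(scheme: str) -> int:
    """Number of cached values per token per layer for each attention scheme."""
    if scheme == "MHA":
        # every query head stores its own key and value vector
        return 2 * N_HEADS * HEAD_DIM
    if scheme == "GQA":
        # one key and one value vector per group of query heads
        return 2 * N_KV_GROUPS * HEAD_DIM
    if scheme == "MLA":
        # a single compressed latent stands in for all keys and values
        # (simplification: ignores the decoupled RoPE key DeepSeek also caches)
        return LATENT_DIM
    raise ValueError(f"unknown scheme: {scheme}")


def kv_cache_gib(scheme: str, n_layers: int = 32, seq_len: int = 32_768,
                 bytes_per_elem: int = 2) -> float:
    """Total KV-cache size in GiB for one sequence."""
    total_bytes = kv_elems_per_token(scheme) * n_layers * seq_len * bytes_per_elem
    return total_bytes / 2**30


for scheme in ("MHA", "GQA", "MLA"):
    print(f"{scheme}: {kv_elems_per_token(scheme)} values/token/layer, "
          f"{kv_cache_gib(scheme):.2f} GiB for one 32k-token sequence")
```

Under these assumptions the script reports 16 GiB for MHA, 4 GiB for GQA, and 1 GiB for MLA. Real savings depend on each model's head counts and latent size.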

Multi-Head Latent Attention (MLA) used in DeepSeek-V3 compared to regular Multi-Head Attention (MHA)

Why MLA Matters for European AI

For NeuroCluster's European AI infrastructure, MLA's efficiency gains are particularly relevant. By shrinking the KV cache, the technique reduces memory usage and memory bandwidth during inference. Ablations reported in the DeepSeek-V2 paper also indicate that it outperforms standard Multi-Head Attention in modeling performance, a rare win-win in AI architecture design.

Performance comparison showing MLA outperforming both MHA and GQA (Source: DeepSeek-V2 paper)

Mixture-of-Experts (MoE) Renaissance

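The resurgence of Mixture-of-Experts (MoE) architectures in 2025 represents a shift toward sparse computation. MoE replaces a single FeedForward block with multiple expert FeedForward blocks plus a router that activates only a few of them per token. This lets models dramatically increase their total parameter count while keeping inference efficient. DeepSeek-V3, for example, has 671 billion parameters in total but activates only about 37 billion per token.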
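Here is a minimal sketch of the idea, assuming a generic top-k softmax router. The router scores every expert, only the `top_k` highest-scoring experts run, and their outputs are mixed using gate weights renormalized over the chosen experts. The toy `Expert` class (one linear map plus ReLU) and all sizes are hypothetical stand-ins. DeepSeek-V3's actual routing differs in its details; for example, it also uses an always-active shared expert.

```python
import math
import random


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, x):
    """Matrix-vector product: weights is a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


class Expert:
    """Hypothetical stand-in for one FeedForward block (linear map + ReLU)."""

    def __init__(self, dim, rng):
        self.w = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
        self.calls = 0  # counts how often this expert actually runs

    def __call__(self, x):
        self.calls += 1
        return [max(0.0, v) for v in linear(self.w, x)]


def moe_layer(x, router, experts, top_k=2):
    logits = linear(router, x)  # one routing score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # renormalize over chosen experts
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):  # only the chosen experts are evaluated
        y = experts[i](x)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


rng = random.Random(0)
DIM, N_EXPERTS, TOP_K = 8, 16, 2  # hypothetical sizes
experts = [Expert(DIM, rng) for _ in range(N_EXPERTS)]
router = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(N_EXPERTS)]

token = [rng.gauss(0, 1) for _ in range(DIM)]
out, chosen = moe_layer(token, router, experts, TOP_K)
print("experts used:", chosen)
print("expert calls:", sum(e.calls for e in experts), "of", N_EXPERTS)
```

Only two of the sixteen experts execute for this token. That is why total parameter count and per-token compute can grow independently.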

Architecture Trends Shaping 2025

Raschka's analysis reveals several key trends emerging across modern LLM architectures:

Efficiency First

Components such as RoPE positional embeddings and SwiGLU activations have become standard building blocks. Newer changes such as GQA, MLA, and MoE layers target memory and compute costs without sacrificing modeling performance.
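Both components are short enough to sketch in plain Python. RoPE rotates each pair of query/key dimensions by an angle proportional to the token position. A SwiGLU feed-forward block multiplies a SiLU-gated projection by a second linear projection, then projects back down. Several details here are assumptions for illustration: the pairing of adjacent dimensions, the base of 10000, the weight names `w_gate`, `w_up`, and `w_down`, and the tiny example weights. Production models use tensor libraries and differ in layout details.

```python
import math


def rope(x, pos, base=10000.0):
    """Rotary embedding: rotate pairs (x[i], x[i+1]) by pos * base**(-i/d)."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = list(x)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


def silu(z):
    # SiLU(z) = z * sigmoid(z); the tanh form avoids overflow for large |z|
    return z * 0.5 * (1.0 + math.tanh(0.5 * z))


def matvec(w, x):
    return [sum(a * b for a, b in zip(row, x)) for row in w]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: w_down @ (SiLU(w_gate @ x) * (w_up @ x))."""
    gated = [silu(g) * u for g, u in zip(matvec(w_gate, x), matvec(w_up, x))]
    return matvec(w_down, gated)


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


# RoPE: the query-key score depends only on the relative offset (2 positions here)
q, k = [0.3, -1.2, 0.7, 0.5], [1.0, 0.4, -0.6, 0.2]
print(math.isclose(dot(rope(q, 5), rope(k, 3)),
                   dot(rope(q, 12), rope(k, 10)), abs_tol=1e-9))  # → True

# SwiGLU with hypothetical weights: d_model = 2, hidden size = 3
w_gate = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.6]]
w_up = [[0.2, 0.2], [-0.3, 0.5], [0.7, -0.1]]
w_down = [[0.1, 0.4, -0.2], [0.3, -0.5, 0.6]]
print(swiglu_ffn([1.0, -2.0], w_gate, w_up, w_down))
```

The RoPE check illustrates the property that makes the technique useful: rotated queries and keys interact only through their relative distance, because each rotation preserves vector length.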

Sparse Computation

MoE architectures are becoming the norm, allowing models to scale parameters while maintaining reasonable inference costs through selective activation.
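The parameter arithmetic behind this trade-off is simple. Total feed-forward parameters grow with the number of experts, while the parameters used per token grow only with the number of active experts. The sizes below (`D_MODEL`, `D_FF`, eight active experts) are hypothetical. Attention and router weights are ignored, and each expert is assumed to be a SwiGLU block with three bias-free projection matrices.

```python
D_MODEL, D_FF = 2048, 1024  # hypothetical model and expert hidden sizes
TOP_K = 8                   # hypothetical number of active experts per token


def expert_params(d_model=D_MODEL, d_ff=D_FF):
    # SwiGLU-style expert: gate, up, and down projections (no biases)
    return 3 * d_model * d_ff


def moe_ffn_params(n_experts, top_k=TOP_K):
    """Return (total, active-per-token) feed-forward parameters for one MoE layer."""
    total = n_experts * expert_params()
    active = top_k * expert_params()
    return total, active


for n in (8, 64, 256):
    total, active = moe_ffn_params(n)
    print(f"{n:>3} experts: {total / 1e6:8.1f}M total, {active / 1e6:6.1f}M active per token")
```

Going from 8 to 256 experts multiplies the layer's feed-forward parameters by 32. The per-token active count stays at about 50.3M.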

OpenAI's Return to Open Source

Architecture overview of OpenAI's gpt-oss-20b and gpt-oss-120b models showing their design choices

In a surprising development, OpenAI released their first open-weight models since GPT-2 with gpt-oss-20b and gpt-oss-120b. Raschka's analysis reveals some interesting architectural choices:

- Width over Depth: Choosing wider architectures with fewer layers for better parallelization

- Fewer, Larger Experts: gpt-oss-20b uses 32 experts, bucking the trend toward many smaller experts

- Attention Details: Using learned attention sinks for improved stability and reintroducing bias units in the attention layers, which most models since GPT-2 have dropped
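Here is one way to picture an attention sink. The sketch assumes the formulation described for gpt-oss, where the sink is a learned per-head logit rather than an actual token. The sink logit joins the softmax normalization but carries no value vector, so a head can place less than 100% of its attention on real tokens. The function names and example numbers are hypothetical. The sketch covers a single head only and leaves out sliding-window attention and the attention bias units.

```python
import math


def sink_softmax(scores, sink_logit):
    """Softmax over one head's attention scores plus a learned sink logit.

    The sink only enlarges the denominator; it has no value vector, so the
    returned weights over real tokens sum to less than 1.
    """
    m = max(max(scores), sink_logit)  # subtract the max for stability
    exps = [math.exp(s - m) for s in scores]
    denom = sum(exps) + math.exp(sink_logit - m)
    return [e / denom for e in exps]


def attend(scores, values, sink_logit):
    """Weighted sum of value vectors using sink-adjusted attention weights."""
    weights = sink_softmax(scores, sink_logit)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


scores = [0.1, 0.2, 0.3]  # hypothetical query-key scores for three tokens
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(sum(sink_softmax(scores, sink_logit=2.0)))   # below 1: the sink absorbs mass
print(sum(sink_softmax(scores, sink_logit=-1e9)))  # about 1: sink effectively off
print(attend(scores, values, sink_logit=2.0))
```

A large learned sink logit lets a head effectively opt out of attending. A very negative one recovers the ordinary softmax.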

Implications for European AI Development

These architectural developments have significant implications for NeuroCluster's European AI strategy:

Strategic Considerations

Efficiency Alignment

The focus on computational efficiency aligns perfectly with European sustainability goals and cost-effective AI deployment across diverse business environments.

Sovereignty Benefits

Understanding these architectural patterns enables European developers to build competitive models while maintaining data sovereignty and regulatory compliance.

Innovation Opportunities

The diversity in architectural approaches suggests there's still significant room for innovation, particularly in efficiency and specialization for European use cases.

Looking Ahead

As Raschka concludes in his analysis, "After all these years, LLM releases remain exciting, and I am curious to see what's next!" This sentiment captures the current state of AI development: we're in a period of rapid architectural experimentation and optimization.

For European AI development, this means opportunities to:

- Leverage efficiency innovations for sustainable AI deployment

- Develop specialized architectures for European regulatory requirements

- Build upon open-source innovations while maintaining competitive advantages

Source & Attribution

This analysis is based on Sebastian Raschka's comprehensive article "The Big LLM Architecture Comparison" published in his "Ahead of AI" newsletter, which has over 127,000 subscribers in the AI research community.

Conclusion

The LLM architecture landscape in 2025 is characterized by intelligent optimization rather than revolutionary change. As Sebastian Raschka's analysis demonstrates, the focus has shifted from purely scaling parameters to achieving better efficiency, specialization, and practical deployment characteristics.

For NeuroCluster and the broader European AI ecosystem, these insights provide a roadmap for developing competitive, efficient, and sustainable AI systems that can serve European businesses while maintaining sovereignty and compliance with evolving regulations.

Stay tuned to the NeuroCluster blog for more insights on AI architecture developments and their implications for European AI strategy.